Introduction

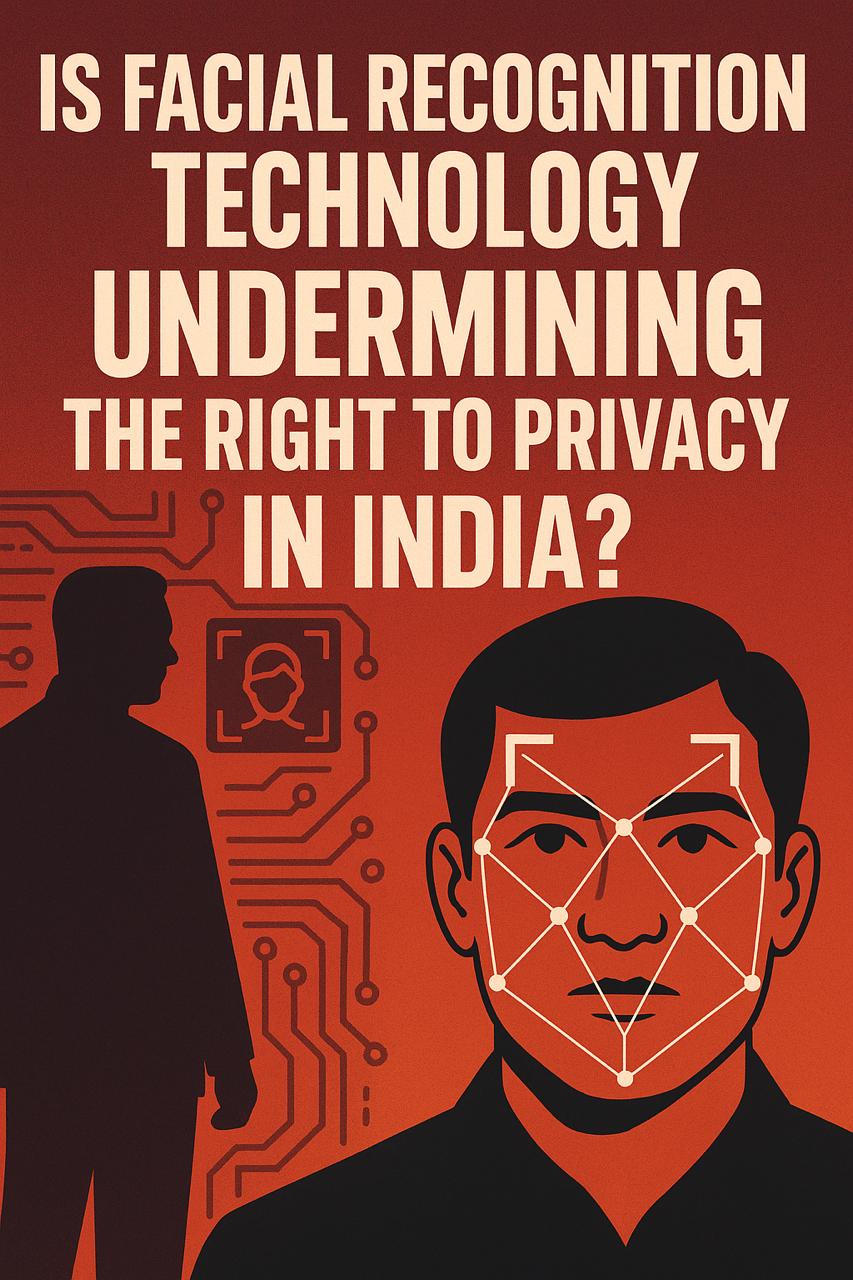

Facial Recognition Technology (FRT) is becoming more common in India, where it is used for everything from policing and airport security to administrative tasks. While it can improve efficiency and enhance public safety, its rollout without proper regulation raises serious questions about individual privacy. In a nation where people are still getting used to the digital landscape and where data protection laws are just starting to take shape, can we really afford to embrace FRT without solid legal protections in place?

The Technology and Its Expanding Use

FRT identifies or verifies individuals based on facial features using biometric software. It is being integrated into surveillance systems at railway stations, airports, and public gatherings. Government programs like the National Automated Facial Recognition System (NAFRS) aim to centralize facial data to aid law enforcement.

Although the intention is to enhance public safety and identify criminals swiftly, there is a significant lack of transparency. Citizens are rarely informed when or how their facial data is being captured or used. The absence of informed consent makes such data collection potentially unconstitutional.

The Privacy Debate Post-Puttaswamy Judgment

The Supreme Court recognized the right to privacy as a fundamental right in Justice K.S. Puttaswamy v. Union of India (2017). The judgment laid down a threefold test for any restriction on privacy: it must be backed by law (legality), serve a legitimate state aim (necessity), and be proportionate to that aim (proportionality).

Most facial recognition programs in India do not meet these requirements. There is no specific law authorizing such surveillance. Its necessity in a democratic society is questionable, especially when used indiscriminately. And without public oversight or impact assessment, proportionality is hard to justify.

Risks and Rights in Conflict

There are serious concerns about misuse, wrongful profiling, and algorithmic bias in FRT systems. Global studies have shown that these systems are often less accurate for women and people with darker skin tones. Errors can lead to wrongful detentions, discrimination, and harassment.

Moreover, the recently enacted Digital Personal Data Protection Act, 2023, does not specifically regulate FRT. It also grants broad exemptions to government agencies, which undermines accountability. In effect, this weakens the citizen’s control over their own biometric data.

Conclusion

Section 306 of the Indian Penal Code (IPC) deals with abetment of suicide: it penalizes a person who abets another in taking their own life. Under this provision, if a person commits suicide, whoever abetted it can be punished with imprisonment of up to ten years and a fine. To secure a conviction under this section, the prosecution must prove beyond a reasonable doubt that (1) the deceased actually took their own life, (2) the accused had the mens rea to instigate, encourage, or assist the act, and (3) the accused, by words or conduct, actively instigated or aided the taking of that life.

About the Author

Bhawya is currently working on a PhD in Law – Corporate Law at Apex Law University. With a keen interest in the crossover between cybercrime, technology, and the law, Bhawya researches the challenges that the introduction of AI creates for modern legal regimes, particularly regarding women’s rights and abuse via digital mediums in India.